Notes On Optimization On Stiefel Manifolds

Summary. This paper provides an introduction to optimization on manifolds. The approach uses the language of differential geometry; however, we emphasise the intuition behind the concepts and the structures that matter for building practical numerical algorithms rather than the technical details of the formulation. A number of algorithms can be applied to such problems; we discuss steepest descent and Newton's method in some detail and reference the more important alternative approaches. There is a wide range of potential applications, which we discuss briefly, explaining one or two in more detail.

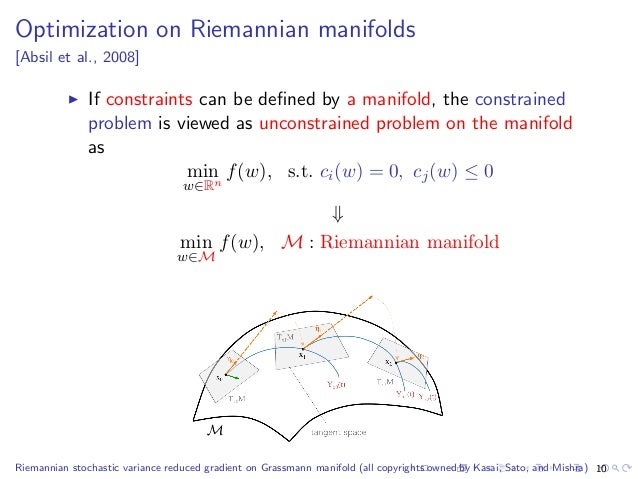


Abstract. We consider the problem of sampling from posterior distributions for Bayesian models in which some parameters are restricted to be orthogonal matrices. Such matrices are sometimes used in neural network models to regularize and stabilize training, and they can also parameterize matrices of bounded rank, positive-definite matrices and others. Byrne & Girolami have already considered sampling from distributions over manifolds using exact geodesic flows in a scheme similar to Hamiltonian Monte Carlo (HMC). We propose a new sampling scheme for the set of orthogonal matrices that is based on the same approach, uses ideas from Riemannian optimization and does not require exact computation of geodesic flows. The method is theoretically justified by a proof of symplecticity for the proposed iteration. In experiments we show that the new scheme is comparable or faster in time per iteration and more sample-efficient than both conventional HMC with an explicit orthogonal parameterization and Geodesic Monte-Carlo.

We also provide promising results of Bayesian ensembling for orthogonal neural networks and low-rank matrix factorization.

In this paper we consider the problem of sampling from posterior distributions for Bayesian models in which some parameters are restricted to be orthogonal matrices. Examples of such models include the orthogonal recurrent neural network (Arjovsky et al.), where an orthogonal hidden-to-hidden matrix is used to mitigate exploding or vanishing gradients, and the orthogonal feed-forward neural network (Huang et al.), where orthogonal matrices in fully-connected or convolutional layers provide additional regularization and stabilize training. In addition, orthogonal matrices can be used in parameterizations of matrices of bounded rank, positive-definite matrices or tensor decompositions. It is known that the set of orthogonal matrices forms a Riemannian manifold (Tagare). From the optimization point of view, exploiting the properties of such manifolds can accelerate optimization procedures in many cases.


Examples include optimization with respect to low-rank matrices (Vandereycken) and tensors in tensor-train format (Steinlechner), the K-FAC approach for training neural networks of different architectures (Martens & Grosse; Martens et al.), and many others. Hence, it is desirable to account for the Riemannian manifold structure of orthogonal matrices within sampling procedures.

One of the major approaches to sampling in Bayesian models is Hamiltonian Monte Carlo (HMC) (Neal et al.). It can be applied in the stochastic setting (Chen et al.) and can take Riemannian geometry into account (Ma et al.). However, applying these methods to sampling with respect to orthogonal matrices requires an explicit parameterization of the corresponding manifold. For square matrices, one possible unambiguous parameterization is given in (Lucas). However, it requires computing a matrix exponential, which is impractical for large matrices.

Another option is the parameterization $Q = X (X^T X)^{-1/2}$, where $X$ is an arbitrary, possibly rectangular, matrix. This parameterization is non-unique and in practice, as we show in this paper, can lead to slow exploration of the distribution.

In this paper we propose a new sampling method for Bayesian models with orthogonal matrices. We extend the HMC approach using ideas from Riemannian optimization for orthogonal matrices (Tagare). The remainder of this introduction outlines the main idea of the proposed method.
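As a brief aside on the parameterization $Q = X (X^T X)^{-1/2}$ mentioned above, the following is a minimal NumPy sketch of the map and of its non-uniqueness. The function name `orthogonalize`, the matrix sizes and the scaling factor are assumptions made for illustration; the inverse square root is formed from an eigendecomposition, which is one of several possible ways to compute it.

```python
import numpy as np

def orthogonalize(X):
    """Map a full-column-rank matrix X to Q = X (X^T X)^{-1/2} (hypothetical helper)."""
    # X^T X is symmetric positive definite when X has full column rank,
    # so its inverse square root can be built from an eigendecomposition.
    eigvals, eigvecs = np.linalg.eigh(X.T @ X)
    inv_sqrt = eigvecs @ np.diag(eigvals ** -0.5) @ eigvecs.T
    return X @ inv_sqrt

rng = np.random.default_rng(0)
X = rng.standard_normal((5, 3))   # arbitrary rectangular matrix (illustrative size)
Q = orthogonalize(X)

# The columns of Q are orthonormal: Q^T Q = I.
print(np.allclose(Q.T @ Q, np.eye(3)))

# Non-uniqueness: a positive rescaling of X maps to the same Q.
print(np.allclose(orthogonalize(2.5 * X), Q))
```

Because whole families of $X$ map to the same $Q$, a sampler working in the space of $X$ can move along directions that do not change $Q$ at all, which is consistent with the slow exploration noted above.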

The basic Riemannian HMC approach (Girolami & Calderhead) introduces auxiliary momentum variables that are restricted to lie in the tangent space at the current values of the distribution parameters. Given some transformation of these parameters, we consider a vector transport that maps the momentum variables to the tangent space at the new parameter values.

Our method is an extension of the previously known Geodesic Monte-Carlo (Geodesic HMC, gHMC) (Byrne & Girolami). The major difference is that the step along the geodesic is replaced with a retraction step, which is much cheaper to compute.

We prove that the proposed transformation of parameters and momentum variables is symplectic, a sufficient condition for correctness of the HMC sampling procedure.
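To make the terms "tangent space", "retraction" and "vector transport" concrete, the sketch below uses two textbook constructions for matrices with orthonormal columns: orthogonal projection onto the tangent space and a QR-based retraction. These are assumptions chosen for illustration only; the retraction and transport actually used by the method follow (Tagare) and may differ. The function names and step size are hypothetical.

```python
import numpy as np

def project_to_tangent(Q, Z):
    """Project Z onto the tangent space at Q, i.e. onto {V : Q^T V + V^T Q = 0}."""
    sym = 0.5 * (Q.T @ Z + Z.T @ Q)
    return Z - Q @ sym

def qr_retraction(Q, V):
    """Move from Q in direction V and map back to orthonormal columns via QR."""
    Y, R = np.linalg.qr(Q + V)
    # Fix column signs so that diag(R) > 0; this makes the retraction of V = 0 equal Q.
    signs = np.sign(np.diag(R))
    signs[signs == 0] = 1.0
    return Y * signs

rng = np.random.default_rng(1)
Q, _ = np.linalg.qr(rng.standard_normal((6, 3)))        # a point with Q^T Q = I
r = project_to_tangent(Q, rng.standard_normal((6, 3)))  # momentum in the tangent space

Q_new = qr_retraction(Q, 0.1 * r)      # cheap replacement for a geodesic step
r_new = project_to_tangent(Q_new, r)   # transport the momentum by projection

print(np.allclose(Q_new.T @ Q_new, np.eye(3)))          # still orthonormal columns
print(np.allclose(Q_new.T @ r_new + r_new.T @ Q_new, 0))  # tangent at the new point
```

The retraction needs only a thin QR factorization, whereas an exact geodesic step generally involves matrix exponentials; this cost difference is the motivation stated above.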

We show in experiments that the proposed sampling method is substantially more sample-efficient than standard HMC with the parameterization $Q = X (X^T X)^{-1/2}$. This holds in both the non-stochastic and stochastic regimes. We also consider Bayesian orthogonal neural networks for the MNIST and CIFAR-10 datasets and show that Bayesian ensembling can improve classification accuracy. For the sake of generality we also consider a low-rank matrix factorization problem and show that Bayesian ensembling can improve prediction quality there as well.

Suppose we want to sample from a distribution $\pi(\theta) \propto \exp(-U(\theta))$ that we know only up to a normalizing constant.

For example, we may want to sample from the posterior distribution of model parameters given data, i.e. $U(\theta) = -\log p(\mathrm{Data} \mid \theta) - \log p(\theta)$. In this situation we can resort to the Hamiltonian Monte Carlo approach, which is essentially a Metropolis algorithm with a special proposal distribution constructed using Hamiltonian dynamics. Consider the following joint distribution of $\theta$ and an auxiliary variable $r$:

$$\pi(\theta, r) \propto \exp\left(-U(\theta) - \tfrac{1}{2} r^T M^{-1} r\right) = \exp\left(-H(\theta, r)\right).$$

The function $H(\theta, r)$ is called the Hamiltonian. To sample from $\pi(\theta)$ it is enough to sample from the joint distribution $\pi(\theta, r)$ and then discard the auxiliary variable $r$. As the joint distribution factorizes,

$$\pi(\theta, r) = \pi(r)\,\pi(\theta \mid r),$$

we can sample $r \sim N(0, M)$ and then sample $\theta$ from $\pi(\theta \mid r)$. To do this we simulate the Hamiltonian dynamics:

$$\frac{d\theta}{dt} = \frac{\partial H}{\partial r} = M^{-1} r, \tag{1}$$

$$\frac{dr}{dt} = -\frac{\partial H}{\partial \theta} = \nabla_\theta \log \pi(\theta). \tag{2}$$

It can be shown that the solution of these differential equations preserves the value of the Hamiltonian, i.e. $H(\theta(t), r(t)) = \mathrm{const}$. Hence we can start from $r(0) = r$ and $\theta(0)$ equal to the previous $\theta$, take $(\theta(t), r(t))$ at some time $t$ as the new proposal, and accept or reject it using the standard Metropolis formula. Since $H(\theta(t), r(t)) = H(\theta(0), r(0))$, the new point is accepted with probability $p_{\text{accept}} = 1$. In practice, the differential equations (1)-(2) cannot be integrated analytically for arbitrary distributions $\pi(\theta)$. However, there exists a special class of numerical integrators for Hamiltonian equations, called symplectic integrators.
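The conservation property stated above follows from the chain rule applied to equations (1) and (2). A short derivation in the same notation, included here as a reading aid:

```latex
\frac{dH}{dt}
  = \left(\frac{\partial H}{\partial \theta}\right)^{T} \frac{d\theta}{dt}
  + \left(\frac{\partial H}{\partial r}\right)^{T} \frac{dr}{dt}
  = \left(\frac{\partial H}{\partial \theta}\right)^{T} \frac{\partial H}{\partial r}
  - \left(\frac{\partial H}{\partial r}\right)^{T} \frac{\partial H}{\partial \theta}
  = 0 .
```

This exact cancellation is lost once time is discretized, which is why the choice of integrator matters; symplectic integrators are the standard choice.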

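Such methods are known to approximately conserve the Hamiltonian of the system and to produce quite accurate solutions (Hairer). The most popular symplectic method for HMC is Leapfrog. One iteration of this method looks as follows:

$$r_{n+\frac{1}{2}} = r_n + \frac{\varepsilon}{2} \nabla_\theta \log \pi(\theta_n), \tag{3}$$

$$\theta_{n+1} = \theta_n + \varepsilon M^{-1} r_{n+\frac{1}{2}}, \tag{4}$$

$$r_{n+1} = r_{n+\frac{1}{2}} + \frac{\varepsilon}{2} \nabla_\theta \log \pi(\theta_{n+1}). \tag{5}$$

Since numerical methods introduce some error, we need to calculate the Metropolis acceptance probability using the old and new values of the Hamiltonian:

$$\rho = \exp\left(H(\theta_{\text{old}}, r_{\text{old}}) - H(\theta_{\text{new}}, r_{\text{new}})\right).$$

The proposal is accepted with probability $\min(1, \rho)$.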
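The Leapfrog iteration (3)-(5) and the Metropolis correction can be sketched in a few lines of NumPy for the unconstrained (Euclidean) case. This is a minimal illustration rather than the method proposed for orthogonal matrices: the standard normal target, the step size `eps`, the number of steps `n_steps` and all function names are assumptions.

```python
import numpy as np

def leapfrog(theta, r, grad_log_pi, eps, n_steps, M_inv):
    """Apply n_steps Leapfrog iterations, i.e. equations (3)-(5)."""
    theta, r = theta.copy(), r.copy()
    for _ in range(n_steps):
        r = r + 0.5 * eps * grad_log_pi(theta)   # (3) half step for momentum
        theta = theta + eps * (M_inv @ r)        # (4) full step for parameters
        r = r + 0.5 * eps * grad_log_pi(theta)   # (5) half step for momentum
    return theta, r

def hamiltonian(theta, r, U, M_inv):
    """H(theta, r) = U(theta) + 0.5 * r^T M^{-1} r."""
    return U(theta) + 0.5 * r @ M_inv @ r

def hmc_step(theta, U, grad_log_pi, eps, n_steps, M, rng):
    """One HMC transition: sample momentum, integrate, accept or reject."""
    M_inv = np.linalg.inv(M)
    r = rng.multivariate_normal(np.zeros(theta.size), M)
    theta_new, r_new = leapfrog(theta, r, grad_log_pi, eps, n_steps, M_inv)
    rho = np.exp(hamiltonian(theta, r, U, M_inv)
                 - hamiltonian(theta_new, r_new, U, M_inv))
    return theta_new if rng.uniform() < min(1.0, rho) else theta

# Toy target (an assumption for illustration): standard normal in 2D,
# so U(theta) = 0.5 * ||theta||^2 and grad log pi(theta) = -theta.
U = lambda th: 0.5 * th @ th
grad_log_pi = lambda th: -th

rng = np.random.default_rng(0)
theta = np.zeros(2)
samples = []
for _ in range(2000):
    theta = hmc_step(theta, U, grad_log_pi, eps=0.2, n_steps=10,
                     M=np.eye(2), rng=rng)
    samples.append(theta)
samples = np.array(samples)
print(samples.mean(axis=0), samples.var(axis=0))
```

For this target the sample mean and variance should be close to 0 and 1. Negating the momentum after a trajectory and integrating again returns to the starting point, which reflects the time-reversibility of Leapfrog.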